Reconciling Effective Altruism and E/acc

Uniting against the AI ethics tribe

Housekeeping: To honor Turpentine’s ~1-year anniversary, we’re releasing our 1-pager master plan. Check it out here and subscribe to Turpentine company updates here.

I discussed EA and E/acc on Bankless this past week and thought I’d write out my thoughts at greater length.

Epistemic status: speculative, not my area of expertise

Effective Altruism’s reputation has taken a massive hit in the last two years. Fairly or unfairly, the combination of SBF and the OpenAI board debacle made EA the butt of all jokes and the supposed root of all problems. If SBF didn’t kill EA outright, the OpenAI debacle seemed to put the final nail in the coffin, somehow making Sam Altman look like an E/acc hero in the process, even though E/accs had previously criticized him for supposedly seeking regulatory capture.

As a refresher, SBF was one of the main funders (and thus faces) of EA. While EA tried to distance itself from SBF after news of his fraud emerged, SBF was as EA as EA gets. It was as if EA bred him in a lab. He had been a loyal EA follower since 2012 and had dedicated his life to EA causes. Crypto was just a way for SBF to fund his EA goals. And he made money in the most EA way possible: arbitrage. EA is all about arbitraging philanthropically: if the same donation can save 100 lives in Africa but only one in America, then it’s worth redirecting that money to Africa. So it’s no coincidence that SBF made his initial money by arbitraging the gap between bitcoin prices in Japan and the US.

EA’s arbitrage as it relates to philanthropy is, in my opinion, one of its biggest accomplishments. The trillions of dollars spent on philanthropy and the nonprofit sector are allocated so emotionally that the outcomes are terrible. And in many ways EA has delivered the goods on the philanthropic front. While many people criticize EA, it’s also worth acknowledging its wins: like saving 200,000 lives. Or ending the torture of hundreds of millions of animals. Or funding work to prevent future pandemics.

EA did this by deploying a ‘Moneyball’ approach to charity — evaluating philanthropy the same way you’d evaluate business investments.

EA then scales that idea up and asks: can you apply that philosophy to all of humanity? This is where we get EA’s other major contribution: longtermism. It’s not enough to compare a person’s life in America with a person’s life in Africa. We should also think about future people, and value these unborn future people as much as we value people living today. And make decisions with that calculus in mind.

The critique of that has always been the same as the critique of utilitarianism: you get into a level of abstraction where you basically start to play God. You start to think that you can build a spreadsheet with huge numbers of variables that extrapolates hundreds of years into the future. You start to think that you can re-engineer society on that basis.

And SBF is a famous example of where this utilitarianism goes wrong, and he showed the extremity of his philosophy in broad daylight. In a famous interview, Tyler Cowen asks SBF: suppose you could roll the dice, and with 51 percent odds you’d get another Earth, but with 49 percent odds you’d lose the one Earth you have. Do you roll the dice? And Sam’s like, from an EV (expected value) perspective, of course you roll the dice. Tyler’s next question is: do you roll the dice again? And SBF says of course, you keep rolling the dice as long as it makes sense from an EV perspective. SBF then applied this EV philosophy, quite literally, to running a crypto exchange. He kept rolling the dice, even when it became incredibly risky (and fraudulent) to do so. He was trying to optimize the future of all humanity by making a trillion dollars that he could use to solve all the world’s problems. He almost did it, too! But alas, it didn’t quite work out in the end. Caroline, that snitch! Just kidding.
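The arithmetic behind Cowen’s question shows why “keep rolling” is ruinous even though every individual roll looks good on paper. Below is a minimal Python sketch, assuming (for illustration) a double-or-nothing version of the wager: a win doubles however many Earths you hold, and a loss destroys all of them. The function names are hypothetical. Each roll multiplies expected Earths by 2 × 0.51 = 1.02, so the expectation grows without bound, while the chance that any Earth survives n rolls is 0.51^n, which collapses toward zero.

```python
# Minimal sketch of the repeated 51/49 "another Earth" wager.
# Assumption (illustrative, not a transcript of the interview): a win doubles
# every Earth you currently hold, and a loss destroys all of them.

P_WIN = 0.51  # probability of winning a single roll


def expected_earths(rolls: int, p_win: float = P_WIN) -> float:
    """Expected number of Earths after `rolls` consecutive rolls, starting from one."""
    # One roll multiplies the expectation by p_win * 2 + (1 - p_win) * 0 = 2 * p_win.
    return (2 * p_win) ** rolls


def survival_probability(rolls: int, p_win: float = P_WIN) -> float:
    """Probability that at least one Earth still exists after `rolls` rolls."""
    # A single loss ends the game, so every roll must be a win.
    return p_win ** rolls


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(f"rolls={n:>3}  expected Earths={expected_earths(n):.3f}  "
              f"P(any Earth left)={survival_probability(n):.2e}")
```

After 100 rolls the expected value is about 7.2 Earths, yet the probability of still having any Earth at all is on the order of 10^-30: maximizing EV at every step all but guarantees ruin.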

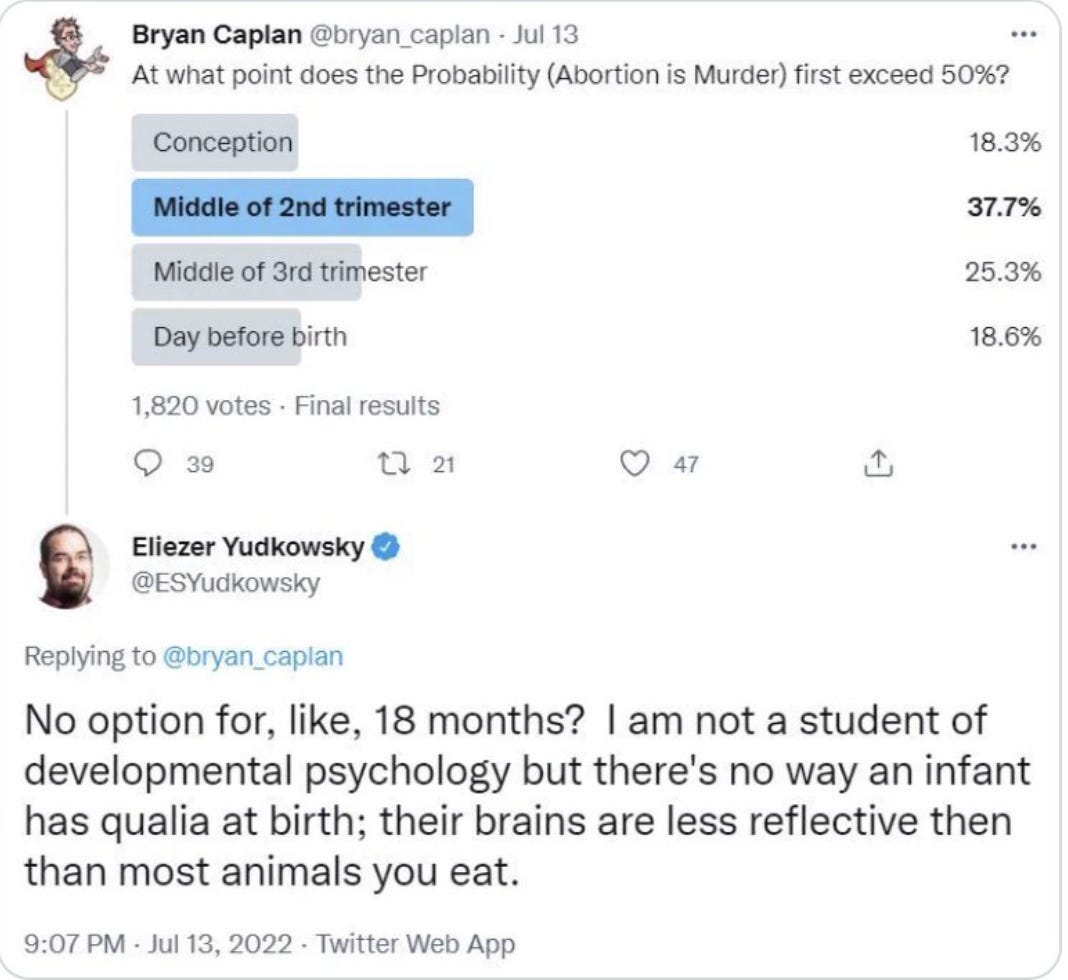

SBF isn’t the only person affiliated with EA who got in trouble for taking utilitarianism to its most extreme logical conclusion. Eliezer Yudkowsky has also shared some repugnant conclusions of his own.

The problem with ethics by Excel spreadsheet, to paraphrase Antonio Garcia Martinez, is that at some point you need moral infinities in the spreadsheet: values that no finite tradeoff can override. Why don't you dice up newborns and harvest them for organs? Well, because Christianity says they're made in the image of God. Or secular liberalism says they have human rights. Those are two different ways of saying the exact same thing: humans are special in ways that the math can’t account for. And we can't just rob them of life, even if the math works out.

Utilitarianism isn’t a catch-all, because simple mathematical tradeoffs only settle the most trivial problems. The real problems (do we skip lockdowns and accept more elderly deaths so that the young might go to school?) aren't soluble via 'hedon' calculations à la Bentham.

Another critique of EA is that it insists on making everything legible: EA views illegibility as a problem to be solved, not as a fundamental condition of the universe. But insist that everything be measured, and the measures themselves become targets; as Goodhart’s law warns, once a measure becomes a target, it ceases to be a good measure.

Relatedly, a lot of innovation can’t be identified in advance. You can’t centrally plan it for the same reasons you can’t centrally plan an economy. Michael Nielsen explained this well:

If you'd been an effective altruist in the 1660s trying to decide whether or not to fund Isaac Newton, the theologian and astrologer and alchemist, he had no legible project at all. It would have looked just very strange. You would have had no way of making any sense of what he was doing from sort of an EV point of view. You know, he was laying the foundations for a worldview that would enable the industrial revolution and a complete transformation in what humanity was about.

That's true for a lot of the most impactful things: they only made sense after they were done, sometimes a long time after they were done. We didn't understand the printing press until after it was done. We certainly didn't understand writing or the alphabet, what those things meant, until a long time after. I don't think I'm making much sense, based on the expression on your face. I'm trying to say, I think of expected value calculations as things that you do when you understand your state space really well, but most of the things which have mattered the most...

Of course, there are many examples where central planners thought they were making the world a better place and, surprise surprise, they weren't. We’ve spent trillions of dollars over the last 60 years trying to reduce inequality with welfare, and it’s been a disaster: the family formation rate has gotten significantly worse, we have a massive prison problem, and disparities haven’t been reduced. It’s the problem James C. Scott describes in Seeing Like a State: we've tried to mess with a complex system without understanding how it operates, and instead introduced second-order effects that are even worse than the original problem.

And EA is often about top-down central planning and rule by committee. Imagine trying to argue for a radical, risky new project on Less Wrong or Reddit. All of the Rational Discussion and Debate is just thinly veiled rule by committee, and nothing great was ever built by a committee.

Another critique is EA’s insistence on impartiality. Implicit in the moral arbitrage we mentioned earlier, where a dollar can do more to improve an African person’s life than an American person’s, is an assumption that we should be impartial across geographic space and time. People living across the world, the logic goes, shouldn’t be any less important than people living next door. Nor, for that matter, should people who aren’t born yet.

This, of course, is highly unnatural. We were raised in families and small tribes. It makes sense that we would care about our tribe more than about someone we’ve never met. But you can make a practical argument for localism too: the reason you should give locally isn't only that you care more about your kid than about the kid in the poor country who needs the bed net. It's also that you can actually find out what effect your intervention has. You can see whether your kid's happy. You can see whether your intervention is working. Bottom-up emergence uses local knowledge in a way that top-down planning never can, because the planner doesn’t have access to all the information. It’s Hayek’s market theory applied to philanthropy.

Tyler Cowen explains the tension with impartiality well:

Let me just give you a simple example. I gave this to Will MacAskill in my podcast with him, and I don't think he had any good answer to my question. And I said to Will, well, Will, let's say aliens were invading the earth and they were going to take us over, in some way enslave us or kill us and turn over all of our resources to their own ends. I said, would you fight on our side or would you first sit down and make a calculation as to whether the aliens would be happier using those resources than we would be? Now, Will, I think, didn't actually have an answer to this question. As an actual psychological fact, virtually all of us would fight on the side of the humans, even assuming we knew nothing about the aliens or even if we somehow knew, well, they would be happier ruling over an enslaved planet earth than we would be happy doing whatever we would do with planet earth. But there's simply in our moral decisions some inescapable partiality.

And let me bring this back to a very well-known philosophic conundrum taken from Derek Parfit, namely the repugnant conclusion. Now Parfit's repugnant conclusion asks the question, which I think you're all familiar with, like should we prefer a world of 200 billion individuals who have lives as rich as Goethe, Beethoven, whoever your exemplars might be, or should we prefer a world of many, many trillions, make it as asymptotically large as you need to be, many, many trillions of people, but living at the barest of levels that make life worth living? Parfit referred to them as lives of muzak and potatoes. So in Parfit's vision of these lives, you wake up when you're born, you hear a little bit of muzak, maybe it's slightly above average muzak, they feed you a spoonful of potatoes, which you enjoy, and then you perish. Now most people, because of Parfit's mere addition principle, would admit there's some value in having this life of muzak and potatoes compared to no life at all, but if you think through the mathematics, if you add up enough of those lives, it would seem a sufficiently large number of those lives is a better world than like the 200 billion people living like Goethe.

I think the so-called answer, if you would call it that, some would call it a non-answer, but it's Hume's observation that we cannot help but be somewhat partial and prefer the lives of the recognizably human entities, the 200 billion Goethes. We side against the repugnant conclusion. There's not actually some formally correct utility calculation that should push us in the opposite direction.
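Parfit’s “add up enough of those lives” step can be written out under a simple total-utilitarian model, the view his argument targets; the symbols here are illustrative assumptions, not Parfit’s notation. Let `U_G` be the welfare of one Goethe-like life and `ε > 0` the small positive welfare of one life of muzak and potatoes. Summing welfare across each world gives:

```latex
W_{\text{Goethe}} = 2\times10^{11}\, U_G, \qquad
W_{\text{muzak}} = N\,\varepsilon, \qquad
W_{\text{muzak}} > W_{\text{Goethe}} \iff N > \frac{2\times10^{11}\, U_G}{\varepsilon}.
```

Because `ε` is positive, some finite `N` always clears that bar, which is exactly the conclusion Cowen says we side against.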

It’s these kinds of repugnant conclusions that contribute to EA taking so much hate, and in so many contradictory ways. While no one seems to agree on why they hate EA, everyone can agree that they hate it. Hating EA is a rallying and uniting force. It gets the people going. From a Scott Alexander piece:

Search “effective altruism” on social media right now, and it’s pretty grim.

Socialists think we’re sociopathic Randroid money-obsessed Silicon Valley hypercapitalists.

But Silicon Valley thinks we’re all overregulation-loving authoritarian communist bureaucrats.

The right thinks we’re all woke SJW extremists.

But the left thinks we’re all fascist white supremacists.

The anti-AI people think we’re the PR arm of AI companies, helping hype their products by saying they’re superintelligent at this very moment.

But the pro-AI people think we want to ban all AI research forever and nationalize all tech companies.

The hippies think we’re a totalizing ideology so hyper-obsessed with ethics that we never have fun or live normal human lives.

But the zealots think we’re a grift who only pretend to care about charity, while we really spend all of our time feasting in castles.

The bigshots think we’re naive children who fall apart at our first contact with real-world politics.

But the journalists think we’re a sinister conspiracy that has “taken over Washington” and have the whole Democratic Party in our pocket.

EA is indeed a punching bag. I think it’s because it lets itself be. EA people don’t defend themselves with enough urgency to earn any other treatment. They’re just not built for it. They don’t have that dawg in them. Their swag is insufficiently differentiated. Their smoke isn’t tough enough. The vibes are off. You get the idea: EA people are incredibly bright, curious, polymathic, first-principled, into alternative lifestyles, high openness, psychedelics, etc., but also beta and cucked and not up for a fight and easy pickings for attackers. Like libertarians before them, EAs just want to make arguments; they don’t actually want to do the dirty work of self-defense.

And this is where E/acc comes in, because E/acc is EA’s foil in many ways, including its approach to public relations. But it’s first worth mentioning that EA and E/acc agree on... almost everything. Like E/accs, EAs are accelerationist on nearly everything other than AI safety: self-driving cars, longevity, nuclear power. They’re basically transhumanists. Ironically, Effective Altruists are the ones who have been criticized over the past decade for being too accelerationist, for thinking that everything can be solved by technology and economic growth.

EAs and E/accs mostly just disagree on the topic of AI safety (which I’ll further flesh out in a future post). Now, AI safety is a big topic, it’s THE topic of the moment, but it's still worth noting that there’s agreement on nearly everything else. Calling EAs doomers or decels is effective in destroying their reputation, but it belies the fact that EAs are mostly techno-optimists and tech accelerationists.

BUT there is one other thing they disagree on, and neither side wants to say it. EA is, in practice, a Democratic Party-affiliated movement. It’s an anti-Trump movement. Most EAs would scoff at this, given that they’re supposed to be first-principled. And they’re mostly libertarian. But there are no openly pro-Trump EAs that I can see. While they don’t love Biden either, they’ll vote for him. Out of the many thousands of EAs, wouldn’t you expect some intellectual diversity? Most EAs are not politically tribal, but none will publicly say they vote for Trump. And EA funders are very large Democratic donors. Richard Hanania wrote about how EA is woke. Another EA wrote about how EA sank $200M into ‘prison reform’ only to possibly make things worse.

To quote Antonio again, “It's shocking how the math always works out to actually support the average midwit liberal Democrat's view... Somehow the math always works out to support what is pretty normie Democratic Party beliefs. It’s amazing how the math just works out that way, right?”

E/acc, by contrast, is an (implicitly) right-coded movement. It’s a way for techies to code as right-wing without conceding that they affiliate with Trump or the proles who support him. Elon explicitly won’t say he’s right-wing, but the issues he draws attention to (immigration concerns, voter fraud, trans/social issues, anti-DEI) are all at least right-coded.

As mentioned, E/acc’s approach to public relations is radically different from EA’s. EA says “let’s have a polite debate”. E/acc says “fuck you, let’s build”. E/acc offers unequivocal defenses of tech. EA hears someone call them a tech bro and tries to prove that they’re not like the tech bro stereotype. Nat Friedman gets the same critique and says “Shut the fuck up”. This difference illuminates a broader vibe shift in tech. After Facebook was criticized following Trump’s 2016 win, Zuck apologized for things Facebook didn’t even do. He did a cross-country tour and tried to apologize his way into popularity. It didn’t work. After trying the beta way, he tried the alpha way: he got jacked, he got into Brazilian Jiu Jitsu, and he accepted Elon’s challenge to a cage fight.

E/acc gets critiqued for being just about “vibes”, but there is some substance there too. If EA is more about top-down planning and legibility, E/acc is about bottom-up, market-based adversarial competition as a check on centralized control.

E/acc is basically an impassioned plea against artificial monopolies, whether held by the government or by corporations through regulatory capture. Vitalik’s idea of D/acc is basically a fusion of E/acc and EA: it combines the E/acc approach of maximizing decentralization with the EA insistence on taking AI safety seriously.

It’s worth separating EA and E/acc from the AI ethics people, who are concerned about what they see as the problems of capitalism: broader inequality and the perpetuation of group disparities they don’t like. This is why Google Gemini’s image generator wouldn’t depict white people, and why OpenAI’s ChatGPT can’t say positive things about Trump or even acknowledge that some people are more beautiful than others. AI ethics people are concerned about misinformation, hate speech, and the problems of capitalism. EAs and E/accs are not concerned about these problems. That’s why I think EAs and E/accs should band together and fight the AI ethics people, whose concerns are much better appreciated by the regulators most likely to impose strangling AI regulation. Instead, EA ends up being the Baptists to AI ethics’ bootleggers. This, of course, won’t help the people who truly want to pause AI: they’re happy with AI being paused, even for the wrong reasons. This argument is only for the AI safetyists who believe we can figure out alignment and don’t want to pause AI, such as the people working at OpenAI and Anthropic.

I believe EA and E/acc need to reconcile, since each needs the other to fight the AI ethics people. EA needs someone to defend it, since it won’t defend itself. E/acc needs EA because it needs to be able to self-critique and self-regulate until alignment is figured out. They also need each other because each is easy prey on its own.

Me against my brother, me and my brother against my cousin: EA and E/acc against AI ethics. Agree on the 95% publicly to present a united front in denouncing other concerns that could prematurely strangle AI development (e.g., “bias”), and disagree on the 5% privately so we can hash out the best approach to AI alignment. This is the way.
